TL;DR

- A post test conversion window can help account for lagged effects of your advertising efforts.

- These windows are especially useful when measuring conversions with long purchase cycles or when determining an appropriate cooldown period between campaigns.

- To determine the duration of a post test conversion window, we can monitor the treatment effects between test and control after the experiment ends until the two reconverge within a region of practical equivalence (ROPE).

Introduction

Marketers often run GeoLift experiments to assess the impact of their advertising efforts. However, advertising effects are rarely instantaneous. Certain actions have longer conversion cycles and require continued monitoring after a campaign completes to assess its impact in full. We explore the use of post test conversion windows to measure these lagged effects, along with some of the considerations advertisers should weigh before using them.

What is a post test conversion window and why do we use them?

A post test conversion window is an interval of time after the completion of an experiment during which we continue to track conversions to account for delayed impacts of our advertising efforts. If our customers typically take the desired action well after they view our advertisement, we can append this window and keep monitoring the desired event after the experiment concludes.

Post test conversion windows can also be used as a cooldown period between testing different strategies. They help us run cleaner experiments knowing we are closer to steady-state performance without the lagged effects of other campaigns.

Jasper’s Market, a fictional retailer, needed to understand the value of their upcoming marketing campaign. They planned to run a month-long campaign and knew they had a typical purchase cycle of three weeks. In other words, it takes their customers an average of three weeks to go from seeing an advertisement to physically visiting the store and making a purchase. Jasper’s Market felt that they would not be able to fully capture the impact of their marketing efforts by measuring only while the campaign was live, so they decided to append a post test conversion window to their experiment to measure the delayed effects of their campaign.

When should we include a post test conversion window?

When deciding whether to include a post test conversion window, we are balancing two opposing forces. On one hand, we want to incorporate the lagged effects of our advertising efforts so that the measurement better represents the complete impact of the campaign. On the other hand, a longer measurement period can introduce noise into the experimental analysis and dilute the measured impact of our campaign.

To balance this in our favor, it makes sense to incorporate a post test conversion window when the marketing campaign effects we are measuring occur well after the advertisement is delivered. The shorter the duration of the campaign relative to the time it typically takes for the conversion to occur (the purchase cycle), the more likely we are to benefit from including this window.

How do we determine the optimal length?

We can start by setting the post test conversion window to the duration of our typical purchase cycle. We can then measure the daily incremental conversion volume, or the average treatment effect on the treated (ATT), over both the test period and the post test conversion window to determine the incremental impact of the test.

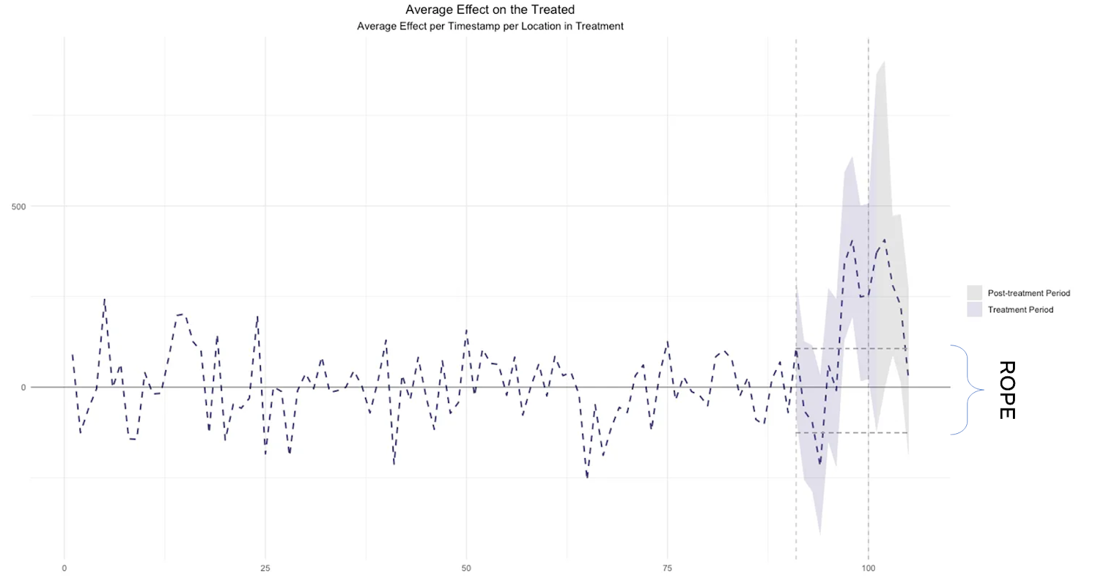
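As a rough illustration of why the window matters for the measured total, the sketch below sums the daily gap between observed test conversions and a counterfactual, first over the campaign only and then over the campaign plus a post test conversion window. It is plain Python rather than GeoLift code, and the function name `incremental_conversions`, its parameters, and all of the numbers are hypothetical.

```python
def incremental_conversions(observed, counterfactual, test_start, test_end, post_days=0):
    """Sum the daily (observed - counterfactual) gap over the test period
    plus an optional post test conversion window.

    test_start and test_end are inclusive 0-based day indexes.
    """
    # Never read past the end of the available data.
    last_day = min(test_end + post_days, len(observed) - 1)
    return sum(
        obs - cf
        for obs, cf in zip(observed[test_start:last_day + 1],
                           counterfactual[test_start:last_day + 1])
    )


# Made-up daily conversions: the campaign runs on days 3-5,
# and the lift fades out over the following days.
observed = [100, 101, 99, 110, 112, 111, 108, 104, 100, 101]
counterfactual = [100] * 10

campaign_only = incremental_conversions(observed, counterfactual, 3, 5)
with_window = incremental_conversions(observed, counterfactual, 3, 5, post_days=2)
print(campaign_only, with_window)  # 33 45
```

In this toy series, stopping at the end of the campaign would miss 12 of the 45 incremental conversions.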

To determine the optimal duration for future tests, we can take note of how long it takes for the test and control geographies to reconverge after the campaign treatment ends and use that as the duration going forward. In some instances, the test and control populations might not reconverge exactly. For these cases, we can define a ‘Region of Practical Equivalence’ (ROPE) for the ATT: if the remaining discrepancy falls within that range, we can treat the two groups as reconverged and adjust the post test conversion window duration accordingly. If the discrepancy is large, however, we should inspect the individual geographies to ensure no unusual events occurred within any of the test or control locations, and possibly repeat the experiment, before assuming the effect persists indefinitely.
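One way to turn "how long until test and control reconverge" into a number is sketched below in plain Python. The helper `days_to_reconverge` is hypothetical, not part of GeoLift, and the requirement that the ATT stay inside the ROPE for a few consecutive days (`min_consecutive`) is our own assumption, added so that a single noisy day is not mistaken for reconvergence.

```python
def days_to_reconverge(post_att, rope, min_consecutive=3):
    """Return how many post-campaign days pass before the daily ATT enters
    the ROPE and stays there for `min_consecutive` days in a row.

    post_att: daily ATT values, starting the day after the campaign ends.
    rope: (low, high) bounds of the region of practical equivalence.
    Returns None if the ATT never settles inside the ROPE.
    """
    low, high = rope
    run = 0  # length of the current streak of days inside the ROPE
    for day, att in enumerate(post_att):
        if low <= att <= high:
            run += 1
            if run == min_consecutive:
                # The streak began (min_consecutive - 1) days earlier.
                return day - min_consecutive + 1
        else:
            run = 0
    return None


# Made-up post-campaign ATT series and ROPE bounds.
post_att = [9.0, 7.5, 6.0, 4.0, 1.5, 0.5, -0.8, 0.3, 1.0]
rope = (-2.0, 2.0)

lag_days = days_to_reconverge(post_att, rope)
print(lag_days)  # 4
```

Here the effect lingers for four days after the campaign ends, which would be the candidate window length for the next test. A result of `None` corresponds to the case above where the discrepancy stays large and the geographies deserve a closer look.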

Jasper’s Market decided to launch their geo-experiment with a post test conversion window of three weeks to ensure they would capture the lagged effects of their marketing efforts. After the experiment ended, they continued monitoring their ATT. As they had suspected, the gap between test and control did not immediately close after the experiment but persisted for an additional two weeks before returning to a negligible level. Had they stopped monitoring the results at the end of the campaign, they would not have fully captured the impact of their efforts. Having the post test conversion window also helped them identify how long they needed to wait after the campaign ended before running their next experiment without fear of contamination.

Viewing Post Test Conversion Windows in GeoLift

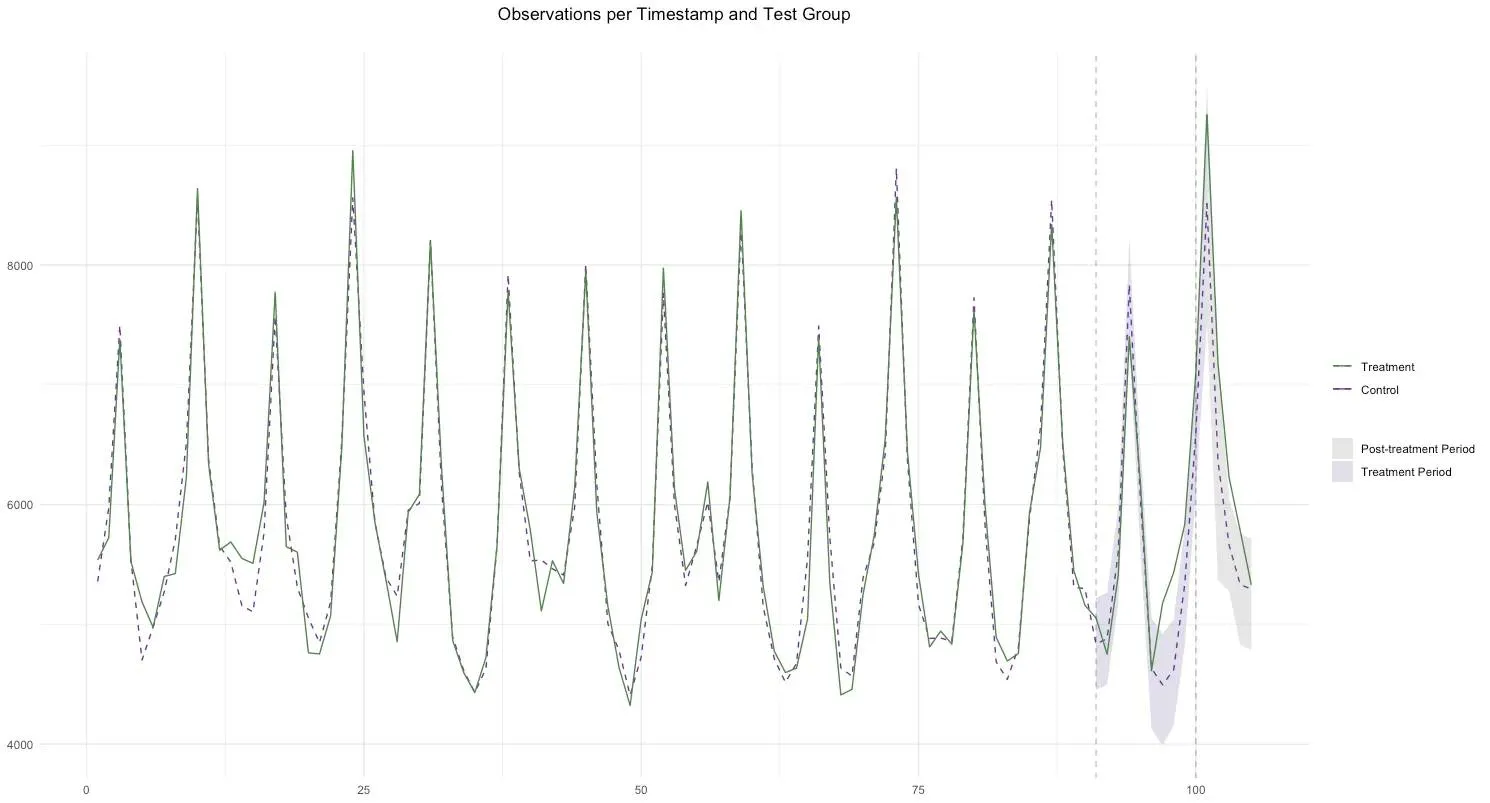

Version 2.4.32 includes a small change that allows the user to input the number of periods to include as a post test conversion window. This update applies to both the absolute Lift and the ATT plots. In the Lift plot, you can pass a ‘post_test_treatment_periods’ value, which displays the Treatment and Post Treatment periods in different colors.

In the ATT plot, you can likewise pass ‘post_test_treatment_periods’ together with a ROPE = TRUE parameter. This delineates the post test conversion window and shows the region of practical equivalence, defined as the range between the 10% and 90% quantiles of the ATT prior to the experiment.
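To make the ROPE definition concrete, here is a minimal Python sketch that derives the band from pre-experiment ATT values using the 10% and 90% quantiles. It is an illustration only: the function name `rope_from_pre_period` is hypothetical, the data is made up, and the interpolation rule (`method="inclusive"` in Python's `statistics.quantiles`) is an assumption that may not match how GeoLift computes its quantiles.

```python
from statistics import quantiles


def rope_from_pre_period(pre_att, lower_pct=10, upper_pct=90):
    """Return (low, high) ROPE bounds as percentiles of the pre-experiment ATT.

    pre_att: ATT values observed before the experiment (at least two).
    lower_pct / upper_pct: integer percentiles between 1 and 99.
    """
    # n=100 yields 99 cut points; cuts[p - 1] is the p-th percentile.
    cuts = quantiles(pre_att, n=100, method="inclusive")
    return cuts[lower_pct - 1], cuts[upper_pct - 1]


# Made-up pre-experiment ATT values fluctuating around zero.
pre_att = [-5, -4, -3, -2, -1, 0, 1, 2, 3, 4, 5]

low, high = rope_from_pre_period(pre_att)
print(low, high)  # -4.0 4.0
```

Any post-campaign ATT that falls between `low` and `high` would then count as practically equivalent to no effect.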

Final Thoughts

When measuring the impact of your next advertising campaign, consider adding a post test conversion window to capture the lagged effects of your marketing efforts. The longer the purchase cycle for your product, the more crucial it is to incorporate one in your analysis. In addition to helping with measurement, the cooldown period also helps ensure you launch your next experiment from a clean slate, without contamination from your prior campaign.

What’s up next?

Stay tuned for our next blog posts, related to topics like:

- Calibrating your MMM model with GeoLift.

- When should I use Fixed Effects to get a higher signal to noise ratio?

- When should our GeoLift test start?